Welcome to my Data Stack page! This is where I showcase the blend of skills, experiences, and technologies that define my journey as a data leader. Whether you’re a recruiter, potential collaborator, or simply curious, I hope this gives you a clear view of my expertise in building and executing data strategies that drive business success.

My languages of choice are, of course, R, SQL, and Python.

🧠 Soft Skills

🎯 Business Strategy & Understanding

Data is more than just numbers; it’s a strategic asset that can transform businesses. My experience spans multiple industries, allowing me to deeply understand business needs and align data solutions with strategic goals. Whether it’s optimizing healthcare planning or driving retail growth, I ensure that data initiatives are tied to the bigger picture, creating real value.

🗣️ Communication & Negotiation

Effective communication is crucial in the data field, where complex concepts need to be accessible to both technical and non-technical stakeholders. I excel at distilling intricate data strategies into clear, actionable insights. Negotiation is another key strength: I balance diverse priorities so that data solutions meet the needs of all stakeholders.

📅 Roadmapping & Strategic Planning

Creating a clear roadmap is essential for steering data initiatives towards long-term success. My approach to strategic planning ensures that every step, from data collection to deployment, is aligned with broader business objectives. I’ve successfully crafted and executed roadmaps that keep projects on track and deliver tangible results.

🤝 People Leadership & Empathy

Data leadership is as much about people as it is about technology. I foster a collaborative environment where every team member feels valued and motivated. My leadership style prioritizes active listening, mentorship, and cross-functional collaboration, bridging the gap between technical teams and business units to achieve shared goals.

🔧 Technical Skills

🗄️ Data Warehousing & Storage Solutions

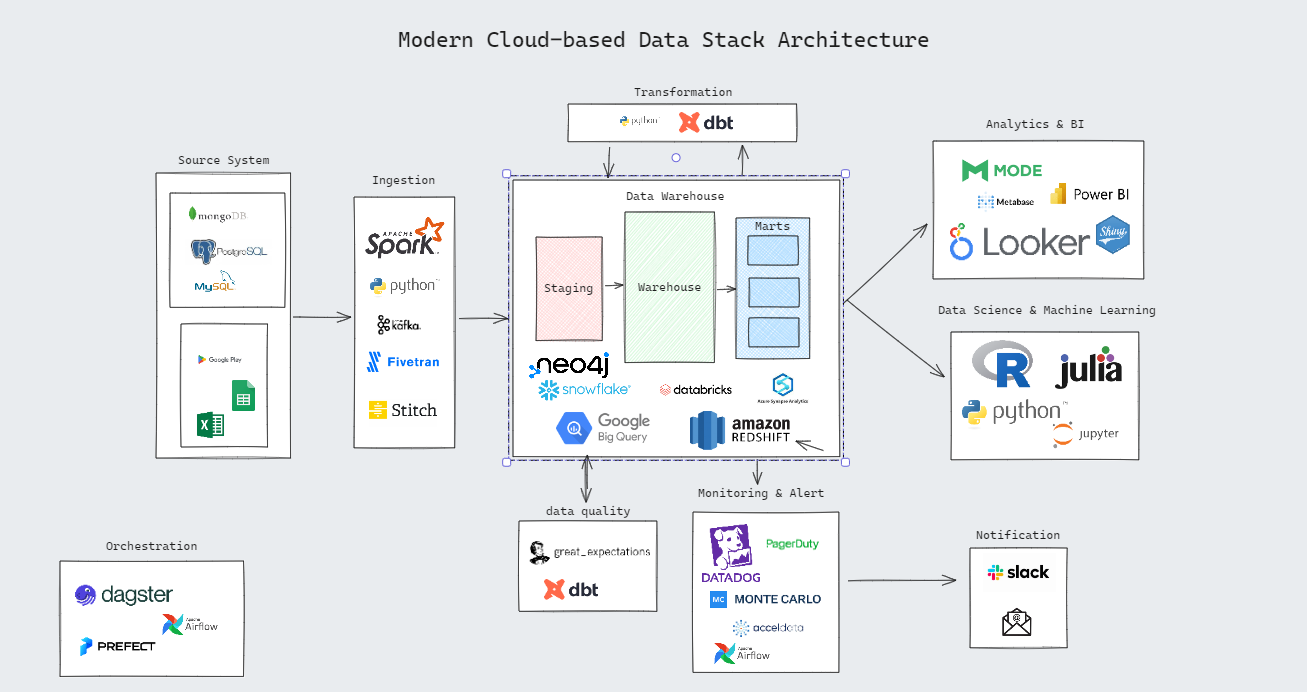

At the heart of any modern data stack is a robust data warehouse. Here’s what I’ve worked with:

- Snowflake: My preferred choice for its scalability and flexibility.

- BigQuery and Redshift: For handling large-scale data analytics.

- PostgreSQL: A reliable, relational database workhorse.

- Neo4j: Ideal for storing and querying complex graph data structures.

🔄 Data Transformation & ETL

Turning raw data into actionable insights is a critical part of the data lifecycle. I’m proficient with dbt (data build tool), along with Python and R, for automating and managing ETL processes. This ensures that data is clean, consistent, and primed for analysis.
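To make the idea of "clean, consistent" data concrete, here is a minimal Python sketch of a cleaning step. The records, field names, and rules are hypothetical and only illustrate the pattern; in practice this kind of logic would more likely live in a dbt model or a pipeline task.

```python
from datetime import date

# Hypothetical raw records, as they might arrive from a source system.
RAW_ROWS = [
    {"id": "1", "email": " Ana@Example.com ", "signup": "2024-01-05"},
    {"id": "1", "email": "ana@example.com", "signup": "2024-01-05"},  # duplicate id
    {"id": "2", "email": "", "signup": "2024-02-10"},                 # missing email
    {"id": "3", "email": "bo@example.com", "signup": "2024-03-15"},
]


def clean_rows(rows):
    """Normalize text and types, drop rows without an email, keep the first row per id."""
    seen_ids = set()
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue  # skip records with no usable email
        row_id = int(row["id"])
        if row_id in seen_ids:
            continue  # keep only the first occurrence of each id
        seen_ids.add(row_id)
        cleaned.append(
            {"id": row_id, "email": email, "signup": date.fromisoformat(row["signup"])}
        )
    return cleaned


if __name__ == "__main__":
    for row in clean_rows(RAW_ROWS):
        print(row)
```

The same three steps (normalize, filter, deduplicate) map naturally onto SQL in a warehouse; the Python version is just easier to read in isolation.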

📈 Data Analytics & Modeling

From exploratory data analysis to predictive modeling, I use a variety of techniques to extract insights:

- Machine Learning: Implementing algorithms like Random Forests, Gradient Boosting, and Neural Networks to solve complex problems.

- Clustering & Classification: Segmenting data to uncover patterns.

- Natural Language Processing (NLP): Extracting insights from text data.

- Regression Analysis: Predicting outcomes and identifying trends.
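As a small illustration of the regression bullet above, the following sketch fits a straight line by ordinary least squares using only the Python standard library. The monthly figures are made up for the example, and a real project would normally rely on an established library rather than hand-written formulas.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit for y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of cross deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Predicted y for a new x under the fitted line."""
    return slope * x + intercept


if __name__ == "__main__":
    months = [1, 2, 3, 4, 5]   # hypothetical time index
    sales = [3, 5, 7, 9, 11]   # hypothetical outcome values
    slope, intercept = fit_line(months, sales)
    print(slope, intercept, predict(6, slope, intercept))
```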

🌍 Geospatial & Graph Analytics

Specializing in geospatial and graph analytics allows me to dive deeper into data relationships:

- Geospatial Analytics: Leveraging location-based data to optimize resource allocation in sectors like healthcare and renewable energy.

- Graph Analytics: Analyzing relationships within data, such as historical real estate pricing trends and competitor pricing analysis in retail, to uncover insights that traditional methods might overlook.
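A common building block in location-based work is the great-circle distance between two points. The sketch below uses the haversine formula to find the closest site to a given location; the site names and coordinates are hypothetical, and the spherical-Earth radius is an approximation.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_site(point, sites):
    """Return the name of the site closest to `point`.

    `point` is a (lat, lon) tuple; `sites` maps a site name to a (lat, lon) tuple.
    """
    return min(sites, key=lambda name: haversine_km(*point, *sites[name]))


if __name__ == "__main__":
    # Hypothetical facilities, for illustration only.
    clinics = {"north": (1.0, 0.0), "east": (0.0, 0.5)}
    print(nearest_site((0.0, 0.1), clinics))
```

For real workloads, a spatial index or a geospatial library would replace the linear scan in `nearest_site`.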

⚙️ Data Pipeline Orchestration & CI/CD

Managing and automating complex data workflows is essential for maintaining efficiency:

- Airflow, Prefect, and Dagster: For orchestrating data pipelines, ensuring reliability and scalability.

- Apache Spark: For large-scale data processing.

- CI/CD Tools: Implementing continuous integration and delivery practices to automate testing, deployment, and monitoring, ensuring that data products are always up to date and reliable.
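At their core, orchestrators run tasks in an order that respects their dependencies. The following sketch shows that idea with Python's standard-library `graphlib`; it does not use the Airflow, Prefect, or Dagster APIs, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after all of its upstream tasks and return the execution order.

    `tasks` maps a task name to a zero-argument callable.
    `dependencies` maps a task name to the set of task names it depends on.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    log = []
    # Hypothetical three-step pipeline: extract -> transform -> load.
    tasks = {
        "extract": lambda: log.append("extract"),
        "transform": lambda: log.append("transform"),
        "load": lambda: log.append("load"),
    }
    dependencies = {
        "extract": set(),
        "transform": {"extract"},
        "load": {"transform"},
    }
    print(run_pipeline(tasks, dependencies))
```

Production orchestrators add scheduling, retries, and state tracking on top of this ordering step, which is where most of their value comes from.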

📊 Data Visualization & Reporting

Conveying data insights effectively is as important as generating them. My preferred tools include:

- Tableau and Power BI: For creating interactive dashboards and reports that drive decision-making.

- Shiny (R) and Streamlit (Python): For building custom interactive apps and visualizations that engage stakeholders.

🕵🏽‍♂️ Monitoring & Alerting

Keeping data systems running smoothly is critical. I integrate monitoring and alerting into my workflows using:

- PagerDuty: For incident management and real-time alerts.

- Slack: For team communication and immediate notifications.

- Airflow Integration: Utilizing built-in monitoring features to keep data pipelines on track.

- Email Alerts: For ongoing system updates and non-urgent notifications.
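One way to combine the channels above is to route each alert by severity. The sketch below is a hypothetical routing rule, not a real PagerDuty, Slack, or email integration: the severity levels, the 30-minute lateness threshold, and the channel mapping are all assumptions chosen for illustration.

```python
# Hypothetical mapping from severity to notification channel.
ROUTES = {
    "critical": "pagerduty",  # incidents that need an immediate response
    "warning": "slack",       # issues the team should see soon
    "info": "email",          # routine, non-urgent updates
}


def classify_run(failed_tasks, minutes_late, late_threshold=30):
    """Assign a severity to a pipeline run from its failures and lateness."""
    if failed_tasks > 0:
        return "critical"
    if minutes_late > late_threshold:
        return "warning"
    return "info"


def route_alert(severity):
    """Return the channel name for a severity, rejecting unknown levels."""
    try:
        return ROUTES[severity]
    except KeyError:
        raise ValueError(f"unknown severity: {severity!r}") from None


if __name__ == "__main__":
    for failed, late in [(1, 0), (0, 45), (0, 5)]:
        severity = classify_run(failed, late)
        print(failed, late, severity, route_alert(severity))
```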

🔐 Data Governance & Security

Data governance and security are foundational to any data initiative. I’ve implemented frameworks and policies to ensure data quality, privacy, and compliance with regulations like GDPR, safeguarding both the organization and its users.